The Rise of Reasoning AI

The next wave of LLMs has arrived, and a new epoch in artificial intelligence has begun.

But hold on, let’s take a step back. Have you ever wondered what Artificial Intelligence (AI) is or how it was created?

AI is a broad term encompassing various technologies and techniques. Today it’s most often used to mean software that learns from examples and improves its performance through feedback, rather than following instructions coded directly by a programmer. I discuss this further later in the article, and together we’ll break down the history and evolution of AI.
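To make that definition concrete, here is a toy Python sketch built on a made-up spam-detection task. The task, the data, and the function names are all hypothetical. The first function is a hand-coded rule in the spirit of early rules-based systems. The second “learns” its cutoff from labeled examples. Real machine learning models learn far richer patterns than a single threshold, so treat this as an illustration of the idea only.

```python
# Hypothetical toy task: decide whether a message is spam based only on
# how many exclamation marks it contains.


def rule_based_is_spam(exclamations: int) -> bool:
    # Rules-based approach: a programmer chooses the cutoff and codes it directly.
    return exclamations > 3


def learn_threshold(examples: list[tuple[int, bool]]) -> int:
    # Learning approach: try each cutoff seen in the data and keep the one
    # that classifies the most labeled examples correctly.
    best_cutoff, best_correct = 0, -1
    for cutoff in sorted({count for count, _ in examples}):
        correct = sum((count > cutoff) == label for count, label in examples)
        if correct > best_correct:
            best_cutoff, best_correct = cutoff, correct
    return best_cutoff


def learned_is_spam(exclamations: int, cutoff: int) -> bool:
    # The learned model applies whatever cutoff the data suggested.
    return exclamations > cutoff


# Made-up labeled examples: (exclamation count, is_spam)
training_data = [(0, False), (1, False), (2, False), (5, True), (7, True), (9, True)]
cutoff = learn_threshold(training_data)
print(cutoff)                      # 2
print(rule_based_is_spam(3))       # False
print(learned_is_spam(3, cutoff))  # True
```

Feeding the learner more or different examples changes the cutoff without anyone rewriting the code, which is the “improves through feedback” idea in miniature.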

Many terms have floated around over the years, but here’s a simple yet accurate way to think about the evolution of AI:

- Rules-Based Systems (1950s-1980s): Explicit programming with logical rules and if-then statements to solve specific problems. Simple programming, if you consider punch cards fun 🤪

- Machine Learning (1990s-2000s): Algorithms that learn patterns from data to make predictions without explicit programming. These proliferated into the 2010s, with companies like Amazon making big strides. 😳

- Deep Learning (2010s): Neural networks with multiple layers that can automatically extract complex features from raw data. The foundations of LLMs were laid here, specifically in 2017 with the introduction of the transformer architecture that powers virtually all modern LLMs! 🤓

- Generative AI (2020-2022): Models that create new content (text, images, music) that wasn’t explicitly in their training data. The first truly impactful LLMs like GPT-3 (2020) defined the generative AI era with their ability to create human-like text across diverse topics and formats. 🧐

- Multimodal Systems (2022-2023): AI that can process and generate across multiple formats (text, images, audio) simultaneously. GPT-4 was a game changer, taking LLMs to a new level in 2023 with multimodal capabilities. 🤯

- Reasoning Models (2023-Present): Advanced systems that can think step-by-step, understand causal relationships, and demonstrate more human-like problem-solving abilities. 🫣

Keep this simplified six-step sequence in mind as we travel back and forth in time together through this article. We’ll get into AGI in another article.

So what exactly are reasoning AI models? Earlier LLMs essentially predict the next word in a response. Reasoning models still generate text word by word, but they use structured “thinking” approaches: they break problems down, evaluate multiple possibilities, and produce solutions with transparent chains of reasoning. That makes them particularly effective for tasks requiring careful deliberation, such as mathematical problem-solving, logical deduction, and nuanced decision-making, where the path to the answer is as important as the answer itself.
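The “predict the next word” idea can be illustrated with a toy bigram model in Python: it counts which word follows which in a tiny, made-up corpus and always picks the most frequent successor. This is only a sketch under heavy simplifying assumptions, and the function names are hypothetical. Real LLMs use transformer networks over subword tokens with billions of parameters, and reasoning models layer trained step-by-step “thinking” on top of that same word-by-word generation.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    # For every word, count how often each other word immediately follows it.
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: Dict[str, Counter], word: str) -> Optional[str]:
    # Greedy prediction: the successor seen most often after `word`, if any.
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows: Dict[str, Counter], start: str, max_words: int = 5) -> List[str]:
    # Build a response one word at a time by repeatedly predicting the next word.
    output = [start]
    for _ in range(max_words):
        nxt = predict_next(follows, output[-1])
        if nxt is None:
            break
        output.append(nxt)
    return output


# Made-up training text for illustration only.
corpus = "the model reads the text and the model predicts the next word"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # model
print(generate(model, "the", 3))
```

A model like this has no way to plan or check its work; it simply continues the most likely pattern, which is exactly the limitation reasoning models aim to address.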

How will the rise of reasoning AI translate into savings and profits for your business? Stay tuned, we’ll get there. But first, let’s look back at where it all started and how we arrived at the age of AI reasoning.

Early Foundations of AI (Early 1900s–1950s)

The origins of AI can be traced back to the early 20th century when mathematicians and logicians began exploring the possibility of machine intelligence.

These ideas laid the groundwork for the Rules-Based Systems mentioned above. 🤪

What began as a theoretical concept discussed among a small circle of academics has evolved into a powerful tool that’s reshaping how businesses operate across every industry.

Theoretical Groundwork

- Philosophers and scientists speculated about mechanical reasoning and artificial intelligence.

- Alan Turing, a British mathematician, introduced the Turing Test in 1950, a method to determine whether a machine could exhibit human-like intelligence.

- Early computing theories laid the foundation for AI, including ideas about algorithms and symbolic logic.

Although no functional AI systems existed at this time, these theoretical contributions set the stage for future developments.

Artificial Intelligence (AI) has transformed from a theoretical concept into a driving force behind technological innovation. What once existed only in science fiction is now an integral part of industries ranging from healthcare to finance. Over the past century, AI has progressed through distinct phases, each contributing to its current capabilities.

Major Strides in AI (1950s–1980s)

The mid-20th century saw rapid advancements in AI research, fueled by the rise of computing technology.

Early AI Programs and Expert Systems

- John McCarthy coined the term “Artificial Intelligence” for the 1956 Dartmouth workshop and, in 1958, created LISP, which became the dominant AI programming language for decades.

- Researchers created early AI programs capable of solving algebra problems and playing games like chess.

- Expert systems emerged, using rule-based programming to mimic human decision-making in fields such as medicine and engineering.

- SAINT (1961): James Slagle’s Symbolic Automatic INTegrator demonstrated the ability to solve calculus problems at college freshman level.

- ANALOGY (1963): Thomas Evans developed a program that could solve geometric analogy problems from IQ tests.

- SHRDLU (1968-1970): Terry Winograd’s natural language understanding system demonstrated contextual comprehension in a blocks world.

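- Shakey the Robot (late 1960s): Built at the Stanford Research Institute, the first general-purpose mobile robot, combining perception, reasoning, and action. Its planner, STRIPS (Stanford Research Institute Problem Solver), followed in 1971.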

- First International Joint Conference on Artificial Intelligence (1969): Established AI as a formal discipline with regular conferences.
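Expert systems of this era typically stored knowledge as if-then rules and applied them repeatedly to known facts until nothing new could be concluded, a technique called forward chaining. Here is a minimal Python sketch; the car-diagnosis rules and the function name are invented for illustration and are not taken from any real system.

```python
# Hypothetical rule base: each rule is (conditions that must all be known, conclusion).
RULES = [
    ({"engine cranks", "engine does not start"}, "suspect fuel or spark problem"),
    ({"suspect fuel or spark problem", "fuel gauge empty"}, "refuel tank"),
]


def forward_chain(facts: set, rules: list) -> set:
    # Repeatedly fire any rule whose conditions are all satisfied,
    # adding its conclusion, until a full pass adds nothing new.
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


observed = {"engine cranks", "engine does not start", "fuel gauge empty"}
print(sorted(forward_chain(observed, RULES)))
```

Because every conclusion traces back to a named rule, systems like this could explain their answers, but each rule had to be written and maintained by hand, one reason expert systems proved expensive to scale.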

Despite early successes, AI faced setbacks starting in the early 1970s:

- Lighthill Report (1973): James Lighthill’s critical assessment of AI progress for the British government, questioning fundamental capabilities and triggering funding reductions.

- Speech Understanding Research Program Cancellation (1974): DARPA terminated major speech recognition funding after failing to meet ambitious goals.

- Systems struggled with real-world complexity and uncertainty.

- High expectations led to disappointment when AI failed to deliver human-like reasoning.

That initial optimism in AI was followed by a growing recognition of fundamental limitations in computational power, knowledge representation, and theoretical frameworks that would ultimately contribute to the AI Winter.

The AI Winter

There have been two major AI Winters commonly recognized in the field’s history:

- The First AI Winter (mid-1970s to early 1980s): Triggered largely by the 1973 Lighthill Report in the UK and similar reassessments in the US, which criticized AI’s failure to meet ambitious goals. DARPA and other government agencies dramatically reduced funding for AI research during this period, particularly for natural language processing (NLP) and machine translation projects.

- The Second AI Winter (late 1980s to early 1990s): This followed the collapse of the commercial expert systems market. After significant business investment in AI technologies through the early and mid-1980s, companies found that expert systems were expensive to maintain, difficult to scale, and often failed to deliver expected returns on investment. The fall of the Lisp machine market also contributed to this downturn.

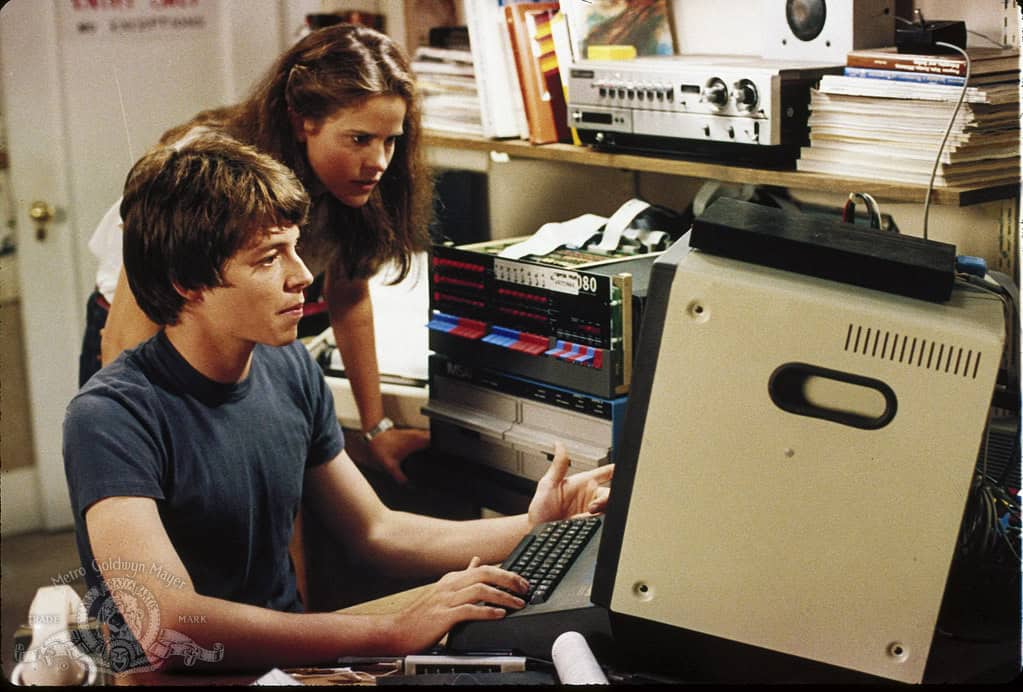

“WarGames,” released in 1983, emerged during a pivotal moment in AI history: just before the onset of the second AI Winter, but amid growing public fascination with computer technology.

Released during the brief commercial AI boom of the early 1980s, when corporations were widely adopting expert systems, “WarGames” ironically helped popularize the concept of intelligent machines just before investors and government funding agencies dramatically scaled back support for AI research in the late 1980s, plunging the field into its second major winter.

The term “AI Winter” was coined by analogy to the concept of “nuclear winter” and first entered the lexicon of the AI research community in 1984. It was prominently used at the American Association of Artificial Intelligence (AAAI) conference that year, specifically during a panel discussion organized by Roger Schank and Marvin Minsky titled “The AI Business.”

Ironically, the term came into popular usage just as the field was about to experience its second major funding collapse in the late 1980s, following the earlier downturn of the mid-1970s. By naming and discussing the phenomenon, researchers were attempting to prevent exactly what would soon unfold – though their efforts were unsuccessful in the short term.

Advancements in AI (1990s–2010s)

The resurgence of AI in the 1990s was driven by improvements in computational power and the availability of large datasets. The 1990s were a key turning point for machine learning: the field moved from primarily theoretical work into practical applications that demonstrated real-world value.

These are the Machine Learning Systems mentioned at the top of the article. 😳

Several key developments during the 1990s helped establish machine learning as a distinct and valuable discipline:

- Practical applications of machine learning in areas like spam filtering, recommendation systems, and fraud detection demonstrated clear commercial value.

- Web search engines like AltaVista and early Google (founded 1998) began using machine learning techniques to improve search results.

- Early recommendation systems emerged on websites like Amazon (launched 1995), which began suggesting products based on customer purchase history.

- Handwriting recognition became practical and was implemented in the Apple Newton (1993) and Palm Pilot devices, allowing people to write naturally on screens.

- Voice recognition systems improved dramatically, with Dragon NaturallySpeaking (1997) becoming the first continuous speech recognition product available to consumers.

- IBM’s Deep Blue defeated reigning world chess champion Garry Kasparov in a six-game match in 1997, demonstrating AI’s growing capabilities.

These applications represented the first wave of ML technology that the average person interacted with regularly, often without realizing the underlying technological advancements making them possible.

Recent AI Developments (2010s–Present)

The past decade has seen AI reach yet another level of sophistication, driven by deep learning and advancements in large-scale neural networks.

In the early 2010s, AI took a dramatic leap forward when researchers started using “deep learning”: teaching computers by showing them millions of examples rather than programming explicit rules. Around 2012, this approach suddenly started working remarkably well for image recognition, most visibly when a deep neural network called AlexNet won that year’s ImageNet competition by a wide margin. Neural networks (systems loosely inspired by connections in the human brain) grew deeper and more powerful as computing chips, especially graphics processors (GPUs), got faster.

Amazon’s ascent to becoming one of history’s most valuable companies can be significantly attributed to its pioneering use of machine learning. Beginning in the late 1990s, when ML was still in its commercial infancy, Amazon deployed recommendation algorithms that fundamentally changed retail by creating personalized shopping experiences at unprecedented scale.

These systems analyzed billions of customer interactions to suggest products with remarkable accuracy, turning the “people who bought this also bought” feature from a novelty into a revenue-generating powerhouse that, by one widely cited estimate, drove about 35% of Amazon’s sales.
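The basic “people who bought this also bought” idea can be sketched as counting how often pairs of products appear together in the same order. The Python below is a deliberately simplified illustration with made-up order data and hypothetical function names. It is not Amazon’s actual system, which Amazon has described as item-to-item collaborative filtering and which operates at vastly larger scale.

```python
from collections import Counter
from itertools import combinations

# Hypothetical order history: each order is the set of products bought together.
orders = [
    {"tent", "sleeping bag", "lantern"},
    {"tent", "sleeping bag"},
    {"tent", "sleeping bag", "pillow"},
    {"tent", "lantern"},
    {"novel", "bookmark"},
]


def co_purchase_counts(orders):
    # Count every unordered pair of products that appears in the same order.
    # Sorting makes each pair's key order (and the counting order) deterministic.
    pairs = Counter()
    for order in orders:
        for a, b in combinations(sorted(order), 2):
            pairs[(a, b)] += 1
    return pairs


def also_bought(product, pairs, top_n=2):
    # Rank the other products by how often they co-occur with `product`.
    scores = Counter()
    for (a, b), count in pairs.items():
        if a == product:
            scores[b] += count
        elif b == product:
            scores[a] += count
    return [item for item, _ in scores.most_common(top_n)]


pairs = co_purchase_counts(orders)
print(also_bought("tent", pairs))  # ['sleeping bag', 'lantern']
```

One common refinement is to divide raw counts by each product’s overall popularity, so best-sellers don’t dominate every recommendation list.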

As the company expanded, machine learning became embedded in virtually every aspect of its operations—from demand forecasting that revolutionized inventory management to dynamic pricing systems that optimized profitability across millions of products simultaneously.

Perhaps most importantly, Amazon recognized ML’s transformative potential earlier than competitors and built its entire infrastructure to leverage data at scale, allowing it to create a flywheel effect where better recommendations led to more purchases, generating more data to further improve its algorithms.

This data-driven approach eventually enabled Amazon to expand beyond retail into cloud computing with AWS, which itself became the backbone for countless other companies’ ML initiatives, effectively allowing Amazon to profit from the entire machine learning revolution while simultaneously using these same technologies to continuously reinvent itself.

And of course, Jeff Bezos built a half-billion-dollar yacht that reportedly required Rotterdam’s Koningshavenbrug (De Hef) bridge to be dismantled for its launch. I’m not sure that ever happened, but you can google it. Let’s stay focused, people.

AI has reshaped industries at a rapid pace, and it represents the culmination of decades of technological advances aimed at improving efficiency.

How Large Language Models (LLMs) were born

The real revolution for language AI began around 2017-2018. Instead of just recognizing patterns in images, researchers figured out how to make neural networks understand and generate human text.

OpenAI, a research lab founded in 2015, played a key role with their GPT (Generative Pre-trained Transformer) models. The first GPT in 2018 was impressive but limited. By 2020, GPT-3 shocked everyone with its ability to write essays, stories, and even code that seemed surprisingly human-like. This happened because these systems were trained on massive amounts of text from the internet – basically reading billions of web pages to learn patterns of language.

Unlike the specialized AI tools from the 1990s that could only do one specific task, these new Large Language Models (LLMs) could handle almost any language task you threw at them. Each new version got dramatically better.

GPT-4

GPT-4 was released by OpenAI on March 14, 2023, and it represented a significant leap forward in AI capabilities that many consider truly game-changing.

What made GPT-4 revolutionary was its dramatically improved reasoning ability. While earlier models could generate convincing text, GPT-4 demonstrated a much deeper understanding of complex topics and could solve multi-step problems that previous models struggled with. Its reported performance on standardized tests was remarkable: according to OpenAI, it scored around the 90th percentile on a simulated Uniform Bar Exam and achieved a 5 (the highest score) on several AP exams.

That was less than two years before this article was written! What made this evolution especially striking was how quickly these systems went from research curiosities to tools that millions of people use daily for writing, learning, and creating, transforming our relationship with technology in just a couple of years.

Perhaps most importantly, GPT-4 crossed a threshold where it became genuinely useful for professionals across many fields, from programmers debugging code to doctors reviewing medical literature. Its improved reliability and reduced (though not eliminated) tendency to “hallucinate” incorrect information made it dependable enough for many serious applications, triggering widespread adoption and integration into workflows across industries.

The Rise of Reasoning AI

Today, a new wave of AI models is emerging, emphasizing reasoning and problem-solving rather than just pattern recognition. This is the Rise of Reasoning AI.

This remarkable evolution is being heralded by a new generation of reasoning AI models that are transforming how we interact with technology.

Unlike their predecessors, which primarily excelled at pattern recognition, models like OpenAI’s o1, DeepSeek-R1, Gemini 2.0 Flash Thinking, Claude 3.7 Sonnet, and Grok 3 are designed to tackle complex problems through methodical, step-by-step reasoning.

These sophisticated systems bring a refreshing transparency to AI interactions by demonstrating how they examine assumptions, carefully weigh different options, and even correct themselves when necessary. This evolution marks a significant shift in how we work with AI—moving from black box solutions to collaborative thought partnerships where humans can follow the AI’s reasoning path.

For businesses, this advancement opens exciting possibilities: structured reasoning that supports clearer decision-making, detailed AI-driven insights that can refine strategic approaches and reduce inefficiencies, and perhaps most importantly, explainable reasoning that makes it easier to identify potential biases and continuously improve accuracy. As reasoning AI continues to develop, it promises to become not just a more powerful tool, but a more thoughtful companion in navigating complex challenges.

AI has come a long way from its early theoretical roots to becoming a fundamental part of modern industries. The shift from rule-based systems to machine learning and deep learning to generative AI has propelled AI into new areas of innovation.

With the rise of reasoning AI, businesses now have access to AI systems that not only provide answers but also explain their thought processes. As AI technology advances, companies that embrace AI-driven solutions will gain a competitive edge, reducing costs and increasing efficiency.

Contact us here for more information on how we can help you accelerate your business with AI.

Article Sources

- Powerful AI for Business in 2025, January 2024, PaleoTech AI

- The State of AI in 2023: Generative AI’s, December 2023, McKinsey

- What is reasoning?, 2025, MoveWorks

- History of artificial intelligence (AI), 2025, Britannica

- The history of AI, 2025, IBM